Item Review Workflow for Exam Development

Item review is the process of putting newly written test questions through rigorous peer review to ensure that they are high quality and meet industry standards.

What is an item review workflow?

Developing a high-quality item bank is an extremely involved process, and authoring the items is just the first step. Items need to go through a defined workflow, with multiple people providing item review. For example, you might require all items to be reviewed by another content expert, a psychometrician, an editor, and a bias reviewer. Each needs to give their input and pass the item along to the next in line. You also need to record the results of each review, because part of validity is having documentation that supports how the test was developed.

What to review?

You should first establish what you want reviewed. Assessment organizations will often formalize the guidelines as an Item Writing Guide. Here is the guide that Assessment Systems uses with our clients, but I also recommend checking out the NBME Item Writing Guide. For an even deeper treatment, I recommend the book Developing and Validating Test Items by Haladyna and Rodriguez (2013).

Here are some aspects to consider for item review.

Content

Most importantly, other content experts should check the item’s content. Is the correct answer actually correct? Are all the distractors actually incorrect? Does the stem provide all the necessary info? You’d be surprised how many times such issues slip past even the best reviewers!

Psychometrics

Psychometricians will often review an item to confirm that it meets best practices and that there are no tip-offs. A common tip-off is that the correct answer is longer (more words) than the distractors. Some organizations also avoid options such as “all of the above.”
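The length tip-off is easy to screen for automatically. Here is a minimal sketch, assuming each item is stored as a dict with hypothetical `options` and `key` fields; these names and the sample items are illustrative only and do not come from any particular item banking platform.

```python
def key_is_longest(options, key):
    """Return True if the keyed (correct) option has strictly more words
    than every distractor."""
    key_len = len(options[key].split())
    distractor_lens = [
        len(text.split()) for label, text in options.items() if label != key
    ]
    return all(key_len > n for n in distractor_lens)


def longest_key_rate(items):
    """Proportion of items whose key is the longest option by word count."""
    flagged = [key_is_longest(item["options"], item["key"]) for item in items]
    return sum(flagged) / len(flagged)


# Hypothetical sample items, for illustration only.
sample_items = [
    {
        "options": {
            "A": "Paris",
            "B": "The city of London in England",
            "C": "Rome",
        },
        "key": "B",
    },
    {
        "options": {"A": "Paris", "B": "London", "C": "Rome"},
        "key": "A",
    },
]

print(longest_key_rate(sample_items))
```

A single item with a long key is not a problem by itself; what matters is whether the pattern shows up across a large share of the bank, which is why the sketch reports a rate rather than flagging individual items.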

Format

Formal editors are sometimes brought in to work on the language and format of the item. A common mistake is to end the stem with a colon even though that does not follow basic grammatical rules of English.

Bias/Sensitivity

For high-stakes exams that are used on diverse populations, it is important to add this step. You don’t want items that are biased against a subset of students. This is not just racial; it can include other differentiations of students. Years ago I worked on items for the US State of Alaska, which has some incredibly rural regions; we had to avoid concepts that many people take for granted, like roads or shopping malls!

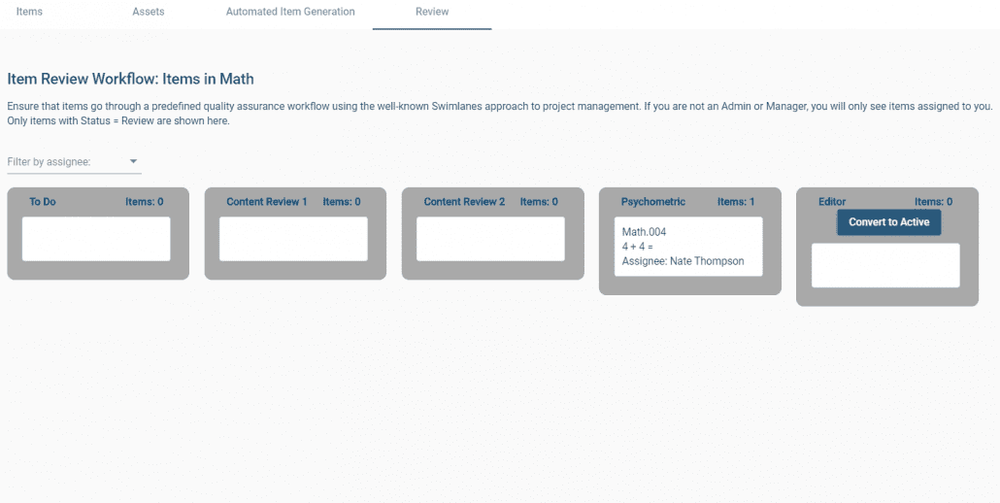

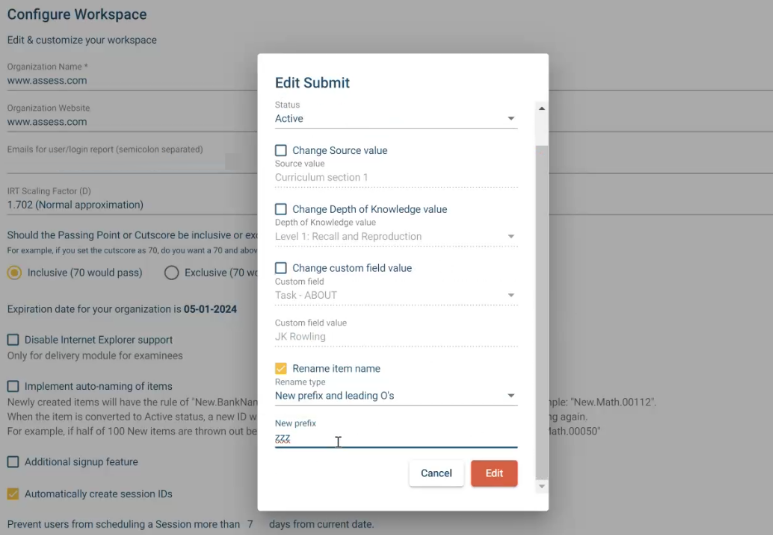

How to implement an item review workflow

This is an example of how to implement the process in a professional-grade item banking platform. Both of our platforms, FastTest and Assess.ai, have powerful functionality to manage this process. Admin users can define the stages and the required input, then manage the team members and flow of items. Assess.ai is unique in the industry with its use of Kanban boards – recognized as the best UI for workflow management – for item review.

An additional step, often done at the same time, is standard setting. One of the most common approaches is the modified-Angoff method, which requires each expert on a panel to provide a difficulty rating for each item. The Item Review interfaces handle this as well, saving you the effort of managing it manually!
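To make the arithmetic concrete: in a typical modified-Angoff study, each rating is an expert's estimate of the probability that a minimally competent candidate answers the item correctly. Ratings are averaged per item, and the item averages are summed to give a recommended raw cut score. The sketch below assumes that setup; the function name, item IDs, and ratings are made up for illustration and are not output from FastTest or Assess.ai.

```python
from statistics import mean


def angoff_cut_score(ratings):
    """Compute per-item mean ratings and the recommended raw cut score.

    ratings: dict mapping item ID -> list of expert ratings, each between
    0 and 1 (estimated probability that a minimally competent candidate
    answers the item correctly).
    """
    item_means = {item_id: mean(values) for item_id, values in ratings.items()}
    cut_score = sum(item_means.values())
    return item_means, cut_score


# Hypothetical ratings from three experts on a three-item test.
example_ratings = {
    "item_1": [0.6, 0.7, 0.8],
    "item_2": [0.5, 0.5, 0.5],
    "item_3": [0.9, 0.8, 1.0],
}

item_means, cut_score = angoff_cut_score(example_ratings)
print(item_means)
print(round(cut_score, 2))
```

With these hypothetical ratings the item averages are about 0.7, 0.5, and 0.9, so the recommended cut score is about 2.1 out of 3 points.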

CREATE WORKFLOW

Specify your stages and how items can move between them
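Conceptually, stages plus allowed moves form a small state machine. The following is a minimal sketch of that idea, not how FastTest or Assess.ai implement it; the stage names are hypothetical, borrowed from the reviewer roles described earlier in this article, and the `Item` class is invented for illustration.

```python
# Hypothetical stages and the moves allowed out of each one.
# Every review stage can either pass the item forward or send it back to draft.
TRANSITIONS = {
    "draft": {"content_review"},
    "content_review": {"psychometric_review", "draft"},
    "psychometric_review": {"editorial_review", "draft"},
    "editorial_review": {"bias_review", "draft"},
    "bias_review": {"released", "draft"},
    "released": set(),
}


class Item:
    """An item that tracks its current stage and a log of every move."""

    def __init__(self, item_id):
        self.item_id = item_id
        self.stage = "draft"
        self.history = []  # (from_stage, to_stage, reviewer, comment)

    def move_to(self, new_stage, reviewer, comment=""):
        """Move the item to new_stage if the workflow allows it."""
        if new_stage not in TRANSITIONS[self.stage]:
            raise ValueError(
                f"Cannot move {self.item_id} from {self.stage} to {new_stage}"
            )
        self.history.append((self.stage, new_stage, reviewer, comment))
        self.stage = new_stage


item = Item("ITEM-001")
item.move_to("content_review", reviewer="author")
item.move_to("psychometric_review", reviewer="content_expert", comment="Key verified")
print(item.stage)
```

Keeping the history on the item is what provides the documentation trail: every move records who made it and why.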

DEFINE YOUR REVIEW FIELDS

These are special item metadata fields that require input from multiple users

MOVE NEW ITEMS INTO THE WORKFLOW

Once an item is written, it is ready for review

ASSIGN ITEMS TO USERS

Assign the item in the UI, with the option to send an email

USERS PERFORM REVIEWS

They can read the item, interact as a student would, and then leave feedback and other metadata in the review fields; then push the item down the line

ADMINS EVALUATE/EXPORT THE RESULTS

Admins can evaluate the results and decide if an item needs revision, or if it can be considered released.
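As a rough illustration of this last step, the sketch below applies one possible decision rule to exported review records: an item is released only when every required role has reviewed and approved it. The role names, the `Review` record, and the rule itself are assumptions made for this example; your organization's policy may differ.

```python
from dataclasses import dataclass

# Hypothetical set of reviews every item must receive before release.
REQUIRED_ROLES = {"content", "psychometric", "editorial", "bias"}


@dataclass
class Review:
    item_id: str
    role: str
    approved: bool
    comment: str = ""


def evaluate(reviews):
    """Return a dict mapping item ID -> 'released', 'needs_revision', or 'incomplete'."""
    by_item = {}
    for review in reviews:
        by_item.setdefault(review.item_id, []).append(review)

    results = {}
    for item_id, item_reviews in by_item.items():
        roles_done = {r.role for r in item_reviews}
        if any(not r.approved for r in item_reviews):
            # Any rejection sends the item back, even if reviews are still pending.
            results[item_id] = "needs_revision"
        elif not REQUIRED_ROLES <= roles_done:
            results[item_id] = "incomplete"
        else:
            results[item_id] = "released"
    return results


# Hypothetical exported reviews for three items.
reviews = [Review("A1", role, True) for role in REQUIRED_ROLES]
reviews += [
    Review("A2", "content", True),
    Review("A2", "bias", False, "Assumes familiarity with shopping malls"),
    Review("A3", "content", True),
]
print(evaluate(reviews))
```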

Nathan Thompson, PhD

April 8, 2024